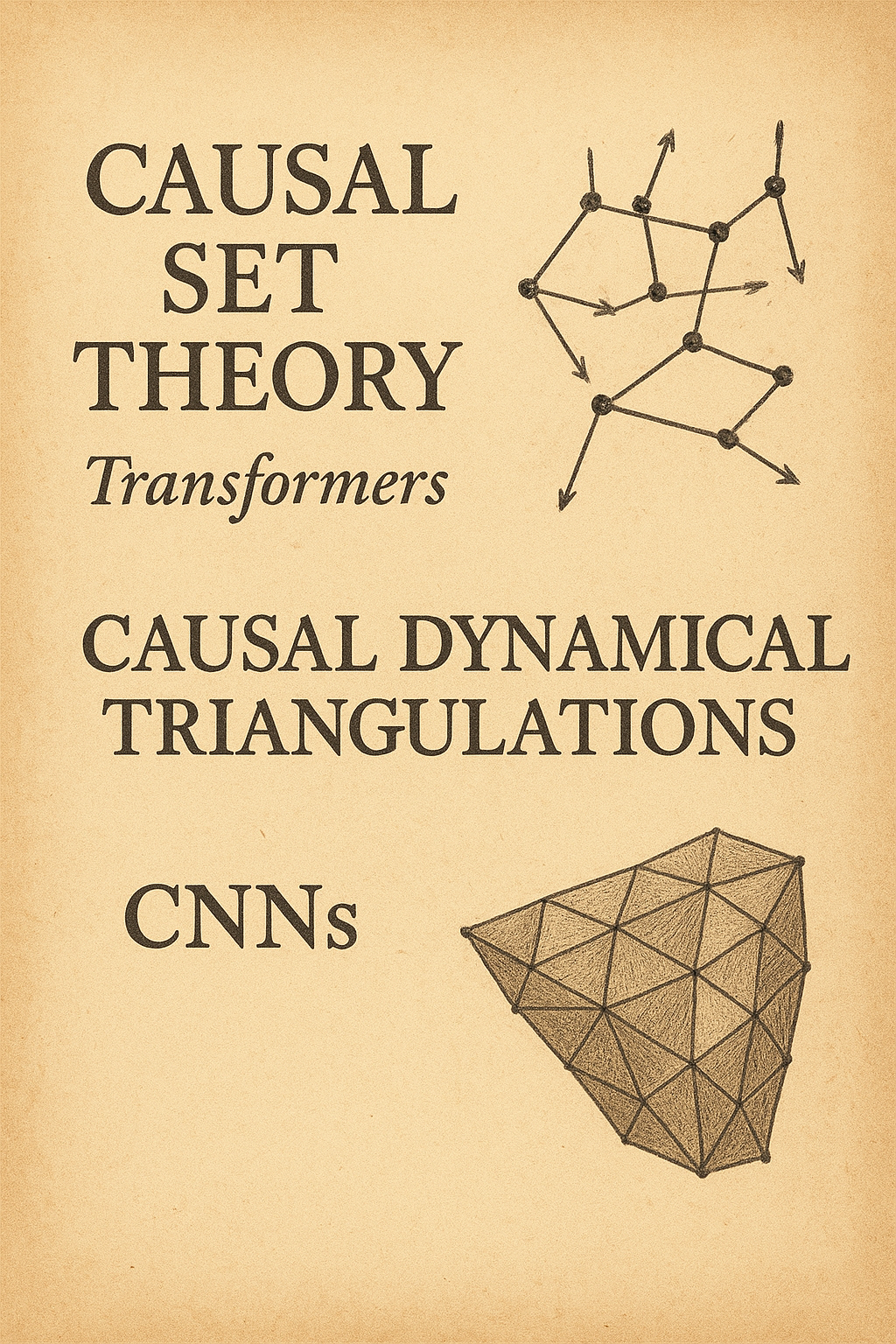

Two approaches to quantum gravity reveal fundamentally different conceptions of spacetime. Causal Set Theory (CST) posits that spacetime consists of discrete, indivisible events ordered solely by causality - geometry emerges from that causal order together with a count of these atomic events. Causal Dynamical Triangulations (CDT), conversely, builds spacetime from geometric simplices glued together, always targeting the smooth continuum limit through careful local construction.

This divide mirrors a central tension in machine learning architectures. Transformers process information as discrete tokens with global attention mechanisms - any element can directly influence any other, regardless of distance. CNNs build understanding through local convolutions, aggregating neighboring information layer by layer until global patterns emerge. The parallel isn't superficial: both pairs embody opposing strategies for handling structured information across dimensions.

The Technical Correspondence

In CST, the fundamental entity is the causal relation between discrete events. Distance and dimension are derived quantities - they emerge from the statistical properties of the causal ordering. Similarly, self-attention on its own is indifferent to token order: a Transformer sees its input as a set, with sequence order supplied only through positional encodings, and it learns which relationships matter through attention weights computed across all pairs.
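To make "dimension from causal ordering" concrete, here is a minimal sketch in plain Python. It assumes a flat 1+1-dimensional causal diamond written in light-cone coordinates, and it fixes the number of points rather than drawing it from a Poisson distribution as a true sprinkling would. The function names are illustrative, not taken from any causal-set library.

```python
import random
from itertools import combinations

def sprinkle_diamond(n, seed=0):
    """Place n points uniformly in a 1+1D causal diamond.

    Assumption: light-cone coordinates (u, v), rescaled so the diamond
    is the unit square. A real sprinkling would also randomize n.
    """
    rng = random.Random(seed)
    return [(rng.random(), rng.random()) for _ in range(n)]

def precedes(p, q):
    """p causally precedes q iff both light-cone coordinates increase."""
    return p[0] < q[0] and p[1] < q[1]

def ordering_fraction(points):
    """Fraction of unordered pairs that are causally related."""
    pairs = list(combinations(points, 2))
    related = sum(1 for p, q in pairs if precedes(p, q) or precedes(q, p))
    return related / len(pairs)

points = sprinkle_diamond(400)
fraction = ordering_fraction(points)
print(f"ordering fraction: {fraction:.3f}")
```

In two dimensions the fraction should come out close to 1/2, and it would fall in higher dimensions, where light cones occupy a smaller share of the region. The code never measures a distance; it only counts which pairs are ordered, which is the sense in which dimension is a derived quantity here.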

CDT maintains geometric locality at every step. Each triangulation respects causality through its foliation structure, and the path integral sums over geometries that preserve this local causal structure. CNNs likewise enforce locality through their convolution kernels, building invariances through hierarchical feature maps that respect spatial adjacency.

The mathematics reinforces this correspondence, at least loosely. CST's sprinkling process places events in spacetime by a Poisson process, so the number of events in a region is proportional to its volume on average; Transformers likewise produce a probability distribution over a token vocabulary from which outputs are sampled. CDT's Regge calculus approximates smooth curvature through deficit angles at simplicial hinges, analogous to how CNN pooling layers approximate continuous downsampling through discrete operations.
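The deficit-angle idea is easiest to see in two dimensions, where the hinges are vertices. The sketch below is a simplified Euclidean illustration rather than CDT's Lorentzian setup: it assumes closed surfaces built from identical equilateral triangles and sums the deficit angles over all vertices. The dictionary of solids and the function name are chosen for illustration.

```python
import math

def deficit_angle(triangles_at_vertex):
    """Deficit at a vertex where equilateral triangles meet.

    Each triangle contributes an interior angle of pi/3; the deficit is
    whatever is missing from a full turn of 2*pi.
    """
    return 2 * math.pi - triangles_at_vertex * (math.pi / 3)

# (number of vertices, triangles meeting at each vertex)
solids = {
    "tetrahedron": (4, 3),
    "octahedron": (6, 4),
    "icosahedron": (12, 5),
}

total_curvature = {
    name: vertices * deficit_angle(k) for name, (vertices, k) in solids.items()
}
for name, total in total_curvature.items():
    print(name, round(total / math.pi, 6), "x pi")
```

All three totals equal 4π, the value the Gauss-Bonnet theorem assigns to any surface with the topology of a sphere, even though the three triangulations distribute that curvature over different numbers of vertices. Six triangles around a vertex give zero deficit, which is the flat case.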

Implications for Information Processing

These architectural choices have profound consequences. CST's discrete structure rests on a binary relation - two events either are or aren't causally related. This binary clarity resembles the mask in Transformer attention, which either admits or excludes a token pair before soft weights are assigned to the pairs that remain. The resulting systems excel at capturing long-range correlations but struggle with local smoothness.
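The distinction between a hard mask and soft attention weights can be sketched in a few lines of plain Python. This is an illustrative toy, assuming a causal mask in which token i may attend only to tokens j <= i; the scores are made up, and no real attention library is involved.

```python
import math

def masked_softmax(scores, mask):
    """Softmax over one row; masked-out positions get weight exactly 0."""
    kept = [s for s, m in zip(scores, mask) if m]
    top = max(kept)  # subtract the max for numerical stability
    exps = [math.exp(s - top) if m else 0.0 for s, m in zip(scores, mask)]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(scores):
    """Apply a causal mask: row i may only see columns j <= i."""
    n = len(scores)
    return [
        masked_softmax(scores[i], [j <= i for j in range(n)])
        for i in range(n)
    ]

# Hypothetical raw attention scores for a 3-token sequence.
scores = [
    [0.0, 2.0, 1.0],
    [1.0, 0.0, 3.0],
    [0.5, 0.5, 0.5],
]
weights = causal_attention(scores)
for row in weights:
    print([round(w, 3) for w in row])
```

The mask plays the role of the causal relation: a pair is either allowed or not. Among the allowed pairs the weights are graded rather than binary, so the analogy to a causal set holds for the mask, not for the attention weights themselves.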

CDT's emphasis on local triangulation ensures geometric consistency but complicates the incorporation of quantum fluctuations at arbitrary scales. CNNs share this trade-off: weight sharing across local windows builds in translation equivariance, but it limits their ability to model genuine long-range dependencies without deep stacking.
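The cost of locality shows up in how slowly information spreads through stacked convolutions. The following sketch, which assumes a 1D signal, a width-3 kernel, and zero padding, pushes a single impulse through several layers and counts how many positions it reaches. The helper names are hypothetical.

```python
def conv1d_same(signal, kernel):
    """1D cross-correlation with zero padding; output length = input length.

    Assumes an odd kernel length so the window is centered.
    """
    half = len(kernel) // 2
    padded = [0.0] * half + list(signal) + [0.0] * half
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]

def influenced_positions(length, layers, kernel):
    """Count positions reached by one central impulse after `layers` convs."""
    signal = [0.0] * length
    signal[length // 2] = 1.0
    for _ in range(layers):
        signal = conv1d_same(signal, kernel)
    return sum(1 for x in signal if x != 0.0)

kernel = [1.0, 1.0, 1.0]  # positive weights, so contributions never cancel
spread = [influenced_positions(41, layers, kernel) for layers in range(1, 6)]
print(spread)  # [3, 5, 7, 9, 11]
```

The reach grows by only two positions per layer, so connecting elements that are n steps apart takes on the order of n/2 layers with this kernel. A single attention layer connects every pair at once, which is the trade-off described above.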

The renormalization group flow in CDT - how physics changes across scales - finds its analog in CNN feature hierarchies. Early layers detect edges, later layers compose objects. Both systems build complexity through controlled information flow across scales, maintaining local coherence while constructing global meaning.

The Deeper Question

This parallel suggests something fundamental about how complex systems process information. Perhaps the discrete/continuous divide isn't merely technical but reflects two irreducible modes of organization. Discrete approaches privilege causality and correlation over metric structure. Continuous approaches privilege smooth deformation and local consistency over global reachability.

Consider how we actually experience time. Memory operates like Transformer attention - distant events suddenly become relevant, causality matters more than duration. Yet physical action unfolds through CNN-like local continuity - we cannot teleport, we must traverse space smoothly. Both modes coexist in our cognition.

The surprise isn't that physics and machine learning converged on similar architectures. It's that both fields, approaching from different angles, discovered the same fundamental tension: information can be organized by causal connection or spatial proximity, but rarely both simultaneously with equal fidelity.

Practical Consequences

For quantum gravity, this suggests neither CST nor CDT alone will suffice. The universe likely employs both strategies at different scales or in different regimes - discrete at the Planck scale, continuous at observable scales, with some mechanism managing the transition.

For AI systems, it explains why hybrid architectures increasingly dominate. Vision Transformers apply attention to sequences of image patches, and hybrid variants take those patches from CNN feature maps. Sparse Transformers restrict attention to sparse, partly local patterns. The most capable systems combine both modes, switching between discrete and continuous processing as tasks demand.
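The step that turns an image into Transformer tokens can be sketched in plain Python. This is a simplified illustration, assuming a single-channel image stored as a list of rows and non-overlapping square patches; the learned linear projection and the position embeddings that a real Vision Transformer applies afterwards are omitted, and `patchify` is a name chosen here.

```python
def patchify(image, patch):
    """Split an H x W image into non-overlapping patch x patch tiles.

    Each tile is flattened into one token vector; tiles are emitted in
    row-major order. Assumes H and W are divisible by `patch`.
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image dimensions must be divisible by the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tokens.append([
                image[top + r][left + c]
                for r in range(patch)
                for c in range(patch)
            ])
    return tokens

# Toy 4x4 image with pixel values 0..15.
image = [[4 * r + c for c in range(4)] for r in range(4)]
tokens = patchify(image, 2)
print(tokens)
# [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]
```

Within each patch, spatial adjacency is preserved, as in a convolution window. Between patches, the sequence is handed to attention, which relates every token to every other. That split is one concrete form the hybrid takes.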

The lesson extends beyond technical domains. Complex systems - biological, social, cognitive - likely require both organizational principles. Evolution operates through discrete mutations evaluated by continuous fitness landscapes. Markets move through discrete trades that approximate continuous price discovery. Understanding emerges from recognizing when each mode applies.

Rather than seeking unified frameworks that dissolve this tension, we might accept it as fundamental - not a problem to solve but a duality to navigate. Time itself, whether in physics or computation, might be precisely this negotiation between the discrete moment and the continuous flow.

#QuantumGravity #MachineLearning #PhysicsOfAI #ComputationalPhysics #TheoreticalCS